Gemma 3 QAT Technical Guide: Google's Latest Quantization-Aware Training Explained | Revolutionary FP16-Level Performance

At the cybernetic frontier of AI computation, Google’s Gemma 3 QAT (Quantization-Aware Training) breaks through the limits of traditional quantization, reducing the memory footprint of the 27B-parameter model from 54GB to 14.1GB while keeping inference quality close to FP16. Compared to traditional Post-Training Quantization (PTQ), QAT makes quantized models markedly more stable by simulating low-precision computation during training. This model isn’t just a pioneer in edge computing; it’s the neural hub for multimodal tasks. Using tables of model parameters, this article dissects the technical differences between QAT and conventional quantization, guiding tech enthusiasts through this pinnacle of neural network optimization.

QAT vs. Conventional Quantization: Neural Optimization’s Nuclear Reaction

Quantization-Aware Training (QAT) is the core technology of Gemma 3 QAT, fundamentally differing from Post-Training Quantization (PTQ) in its “proactive” optimization strategy. While PTQ directly maps FP16 weights to lower bits (like int4/int8) after training, often resulting in significant accuracy loss, QAT naturally adapts the model to low-precision computation environments by introducing quantization noise and dynamically adjusting weights and activation values during training. Here are the key differences between QAT and PTQ:

| Feature | QAT (Quantization-Aware Training) | PTQ (Post-Training Quantization) |
|---|---|---|
| Quantization Timing | Real-time low-precision simulation during training | Static weight mapping after training |
| Accuracy Loss | Close to FP16 (loss less than 1%) | Significant (5-10% or higher) |
| Training Overhead | Additional quantization noise modeling, increased training time | No additional training, direct quantization |
| Weight Optimization | Dynamic weight distribution adjustment, reduced quantization error | Static pruning, error accumulation |
| Use Cases | Edge devices, resource-constrained environments | Quick deployment, lower performance requirements |
| Gemma 3 Performance | 27B model with int4 rivals Gemini-1.5-Pro | PTQ models show degraded performance on complex tasks |
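To make the PTQ column concrete, here is a minimal sketch of static round-to-nearest mapping onto a signed 4-bit range. The single per-tensor symmetric scale and the sample weights are assumptions chosen for illustration; production PTQ pipelines typically use per-channel or per-group scales and calibration data.

```python
def quantize_int4(weights):
    """Symmetric round-to-nearest quantization to the int4 range [-8, 7]."""
    max_abs = max(abs(w) for w in weights)
    # One scale for the whole tensor (assumption; real schemes are finer-grained).
    scale = max_abs / 7 if max_abs > 0 else 1.0
    codes = [max(-8, min(7, round(w / scale))) for w in weights]
    return codes, scale


def dequantize(codes, scale):
    """Map integer codes back to approximate floating-point weights."""
    return [c * scale for c in codes]


# Hypothetical weights, not taken from any real model.
weights = [0.70, -0.32, 0.12, 0.04, -0.01]
codes, scale = quantize_int4(weights)
restored = dequantize(codes, scale)
errors = [abs(w - r) for w, r in zip(weights, restored)]

for w, c, r in zip(weights, codes, restored):
    print(f"{w:+.2f} -> code {c:+d} -> {r:+.3f}")
```

Each restored weight can be off by up to half a quantization step, and the smallest weights lose most of their relative precision. PTQ cannot compensate for this because training has already finished, which is the gap QAT targets.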

QAT implementation includes:

- Pseudo Quantization Nodes: During training, `FP16` operations are dynamically mapped to `int4`/`int8`, with quantization errors optimized through gradient feedback, significantly reducing accuracy loss.

- Mixed Precision Training: Combines `FP16` and low-bit operations, ensuring numerical stability with the post-quantization performance gap controlled within 1%.

- Weight Pruning and Sparsification: Through Structured Pruning, redundant neurons are removed, further compressing the model and accelerating matrix operations.
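The pseudo-quantization idea can be sketched in a few lines of plain Python. This is an illustrative toy, not Gemma 3's actual training code. It assumes a single scalar weight, a fixed scale of 0.1, and the straight-through estimator (STE), a common way to pass gradients through the rounding step that the article does not name explicitly.

```python
def fake_quant(w, scale, qmin=-8, qmax=7):
    """Quantize then dequantize, so the forward pass sees an int4-rounded value."""
    q = max(qmin, min(qmax, round(w / scale)))
    return q * scale


def loss(w_q, data):
    """Mean squared error of the one-parameter model y = w_q * x."""
    return sum((w_q * x - y) ** 2 for x, y in data) / len(data)


def train_qat(data, scale=0.1, lr=0.05, steps=50):
    w = 0.0  # latent full-precision weight that the optimizer updates
    for _ in range(steps):
        w_q = fake_quant(w, scale)  # forward pass uses the quantized weight
        grad_wq = sum(2 * (w_q * x - y) * x for x, y in data) / len(data)
        # STE (assumption): treat d(w_q)/d(w) as 1 and apply the gradient to w.
        w -= lr * grad_wq
    return w


# Hypothetical data generated from a weight (0.33) that is not on the int4 grid.
data = [(x, 0.33 * x) for x in (1.0, 2.0, 3.0)]
w_latent = train_qat(data)
w_quant = fake_quant(w_latent, 0.1)
print("latent weight:", w_latent, "quantized weight:", w_quant)
```

The latent weight stays in full precision, but every loss evaluation goes through `fake_quant`, so the optimizer only ever sees the error that the deployed low-bit weight would produce. With a constant learning rate the latent weight can hover around a rounding boundary, so the final quantized value lands on one of the grid points next to the target.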

The results are stunning: The 27B model’s memory requirement drops from 54GB (FP16) to 14.1GB (int4), with inference running roughly 2.5x faster, while still challenging Gemini-1.5-Pro on the LMSys Chatbot Arena. The 1B model, at just 529MB, enables millisecond-level inference on edge devices, demonstrating QAT’s overwhelming advantages in resource efficiency and performance retention.
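The memory figures follow from simple bytes-per-weight arithmetic, sketched below. The helper name `weight_memory_gb` is hypothetical, and the calculation counts weights only (no activations, KV cache, or runtime overhead).

```python
def weight_memory_gb(num_params, bits_per_weight):
    """Raw weight storage in GB (1 GB = 1e9 bytes), ignoring any metadata."""
    return num_params * bits_per_weight / 8 / 1e9


fp16_gb = weight_memory_gb(27e9, 16)  # 2 bytes per weight
int4_gb = weight_memory_gb(27e9, 4)   # half a byte per weight
quoted_ratio = 54 / 14.1              # ratio of the two figures quoted above

print(f"FP16: {fp16_gb:.1f} GB, int4: {int4_gb:.1f} GB, quoted ratio: {quoted_ratio:.2f}x")
```

The raw int4 count comes out slightly below the quoted 14.1GB. The difference presumably covers quantization scales and any tensors kept at higher precision, though the article does not break this down. The quoted figures correspond to a reduction of about 3.8x.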

Model Parameters and Details: Tabulated Overview

The following tables detail Gemma 3 QAT’s model parameters, architectural details, and QAT optimization features:

Model Parameters

| Parameter Scale | 1B | 4B | 12B | 27B |
|---|---|---|---|---|
| Parameters | 1 billion | 4 billion | 12 billion | 27 billion |
| Context Window | 32K tokens | 128K tokens | 128K tokens | 128K tokens |
| Modality Support | Text | Text + Image | Text + Image | Text + Image |
| Visual Encoder | None | SigLIP (ViT-based, 896x896) | SigLIP (ViT-based, 896x896) | SigLIP (ViT-based, 896x896) |
| Memory Usage (FP16) | ~2GB | ~8GB | ~24GB | ~54GB |
| Memory Usage (int4 QAT) | 529MB | ~2.1GB | ~6.2GB | ~14.1GB |
| Quantization Format | int4, int8 (GGUF, AWQ) | int4, int8 (GGUF, AWQ) | int4, int8 (GGUF, AWQ) | int4, int8 (GGUF, AWQ) |
| Inference Latency (A100 40GB, int4) | ~10ms (single sentence) | ~20ms (single sentence) | ~50ms (single sentence) | ~100ms (single sentence) |
| Recommended Hardware | CPU, Mobile (Android/Web) | RTX 3060, TPU v4 | A100 40GB, TPU v4 | A100 80GB, TPU v5 |
| Task Performance (Examples) | Text generation, Code completion | VQA, Document analysis | Code generation, Chart understanding | Mathematical reasoning, Multimodal dialogue |

Architecture and Optimization

| Architecture & Optimization | Description | Technical Details |
|---|---|---|
| Attention Mechanism | Hybrid attention (Local + Global) | Local:Global layer ratio 5:1, sliding window 1024 tokens, 40% KV cache reduction |
| KV Cache Optimization | Sparse cache + Dynamic compression | Halved cache usage in 128K context, GQA (Grouped-Query Attention) 1.8x speedup |
| Embedding Table Quantization | int4 quantized word embeddings and projection matrices | 20% memory reduction, accelerated forward propagation |
| QAT Core Mechanism | Pseudo quantization + Mixed precision | Training-time int4/int8 operation simulation, gradient feedback weight optimization, accuracy loss less than 1% |
| Training Strategy | Knowledge distillation + Reinforcement learning | KL divergence loss distillation, RLHF/RLMF/RLEF alignment for math and code tasks |
| Hardware Acceleration | SIMD instruction set optimization | Supports AVX512, NEON, INT4 GEMM 3x inference speedup |
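Part of why int4 tensors are so compact is that two 4-bit codes fit in one byte. The sketch below shows one way to pack and unpack signed int4 values. The low-nibble-first layout is an assumption for illustration; real formats such as GGUF define their own block layouts and store scales alongside the codes.

```python
def pack_int4(codes):
    """Pack signed int4 codes (-8..7) two per byte, low nibble first."""
    if len(codes) % 2:
        codes = codes + [0]  # pad to an even count
    out = bytearray()
    for lo, hi in zip(codes[0::2], codes[1::2]):
        # Masking with 0xF keeps the 4-bit two's-complement pattern.
        out.append((lo & 0xF) | ((hi & 0xF) << 4))
    return bytes(out)


def unpack_int4(packed, count):
    """Recover `count` signed int4 codes from packed bytes."""
    codes = []
    for byte in packed:
        for nibble in (byte & 0xF, byte >> 4):
            # Nibbles 8..15 represent the negative values -8..-1.
            codes.append(nibble - 16 if nibble >= 8 else nibble)
    return codes[:count]


# Hypothetical codes, for example the output of an int4 quantizer.
codes = [-8, 7, 0, -1, 3]
packed = pack_int4(codes)
print(len(codes), "codes stored in", len(packed), "bytes")
```

A kernel that consumes this layout has to unpack nibbles on the fly, which is where SIMD-friendly instruction sets help.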

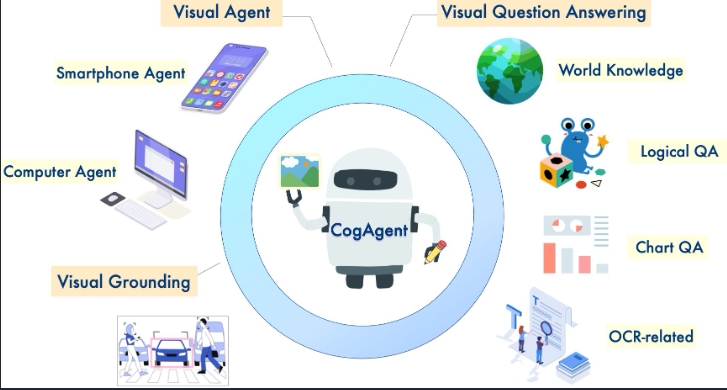

Multimodal Architecture: 128K Context Neural Matrix

Gemma 3 QAT builds on the Transformer architecture with deep optimizations for multimodal and long-context capabilities:

- `SigLIP` Visual Encoder: Employs `Vision Transformer` (ViT), supporting 896x896 resolution images with Adaptive Windowing for high-resolution or non-square inputs. Visual and textual features are fused through cross-modal alignment, suitable for visual question answering (VQA) and document analysis (DocVQA).

- Hybrid Attention Mechanism: Local-to-global attention layer ratio optimized to 5:1, sliding window reduced from 4096 to 1024, lowering key-value cache (`KV Cache`) usage while maintaining 128K context performance.

- Sequence Modeling: Combines grouped-query attention (`GQA`) with multi-head attention (MHA) to enhance efficiency in long sequence tasks like codebase analysis.
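The 5:1 local-to-global pattern and the 1024-token sliding window can be sketched as follows. The layer count of 12 is a hypothetical value chosen for illustration, and the function counts cached token positions only, so it is not meant to reproduce the percentage figures quoted in this article.

```python
def layer_types(num_layers, local_per_global=5):
    """Repeat `local_per_global` local layers followed by one global layer."""
    period = local_per_global + 1
    return ["global" if (i + 1) % period == 0 else "local" for i in range(num_layers)]


def can_attend(query_pos, key_pos, window=1024):
    """Causal sliding-window rule used by local layers."""
    return 0 <= query_pos - key_pos < window


def cached_tokens(num_layers, context_len, window=1024, local_per_global=5):
    """Total token positions held in the KV cache across all layers."""
    total = 0
    for kind in layer_types(num_layers, local_per_global):
        # Global layers keep the full context; local layers keep only the window.
        total += context_len if kind == "global" else min(context_len, window)
    return total


num_layers = 12          # hypothetical, for illustration only
context_len = 128 * 1024
hybrid = cached_tokens(num_layers, context_len)
all_global = num_layers * context_len
print(f"hybrid cache is {hybrid / all_global:.1%} of an all-global cache")
```

Under these assumptions the saving grows with context length, because local layers stop adding cache entries once the context exceeds the window. For contexts shorter than the window, both layer types cache the same number of positions.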

Multimodal pre-training combines contrastive learning and masked language modeling, achieving SOTA on tasks like MMLU (multilingual), GSM8K/MATH (mathematics), and HumanEval (code generation). The 27B model approaches specialized model performance on tasks like ChartQA, while the 4B model provides an efficient alternative for resource-constrained scenarios.

QAT Performance Advantages: From Edge to Cloud

QAT’s “training-time quantization” strategy enables Gemma 3 QAT to significantly outperform PTQ models in various scenarios:

- Edge Devices: The `1B` model (529MB) runs offline on `Android/Web` with latency as low as 10ms, ideal for privacy-sensitive applications (e.g., medical, financial). `PTQ` models at the same size suffer up to 10% accuracy loss and struggle with complex tasks.

- Long Context Tasks: In 128K context windows, `QAT` models achieve 40% lower memory usage and 1.8x faster inference through `KV` cache optimization and `GQA`. `PTQ` models tend to accumulate errors in long sequence tasks.

- Multimodal Inference: `QAT` optimizes visual-text modality alignment through pseudo quantization, with the `27B` model approaching `FP16` performance on `DocVQA`, while `PTQ` models show unstable performance in image tasks.

Training and Optimization: Multi-level Neural Synergy

Gemma 3 QAT’s performance stems from several optimizations:

- Knowledge Distillation and Reinforcement Learning:
  - Distillation from larger models (like `Gemini`) using `KL` divergence loss and sequence-level alignment
  - `RLHF/RLMF/RLEF` optimization for mathematical reasoning and code generation, improving `MMLU` scores by ~5%

- Key-Value Cache Optimization:
  - Sparse `KV` cache and dynamic compression, halving cache usage in 128K context
  - `GQA` mechanism reduces attention computation overhead, suitable for long document analysis

- Hardware Adaptation:
  - Weight optimization for `TPU/GPU/CPU` `SIMD` instruction sets (`AVX512`, `NEON`), `INT4` `GEMM` 3x inference speedup
  - Integration with `llama.cpp`, `MLX` frameworks for enhanced edge device efficiency
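The KL divergence loss used for distillation can be sketched for a single token position as follows. The logits are hypothetical, and the direction KL(teacher || student) is an assumption, since the article does not say which direction is used.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def kl_divergence(teacher_logits, student_logits):
    """KL(teacher || student) over one next-token distribution."""
    p = softmax(teacher_logits)
    q = softmax(student_logits)
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


# Hypothetical logits over a four-token vocabulary.
teacher = [2.0, 1.0, 0.1, -1.0]
student = [1.5, 1.2, 0.3, -0.5]
print("distillation loss:", kl_divergence(teacher, student))
```

The loss is zero when the student reproduces the teacher's distribution exactly and grows as the two diverge. In a QAT setting, the student's logits would come from the forward pass that uses the pseudo-quantized weights.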

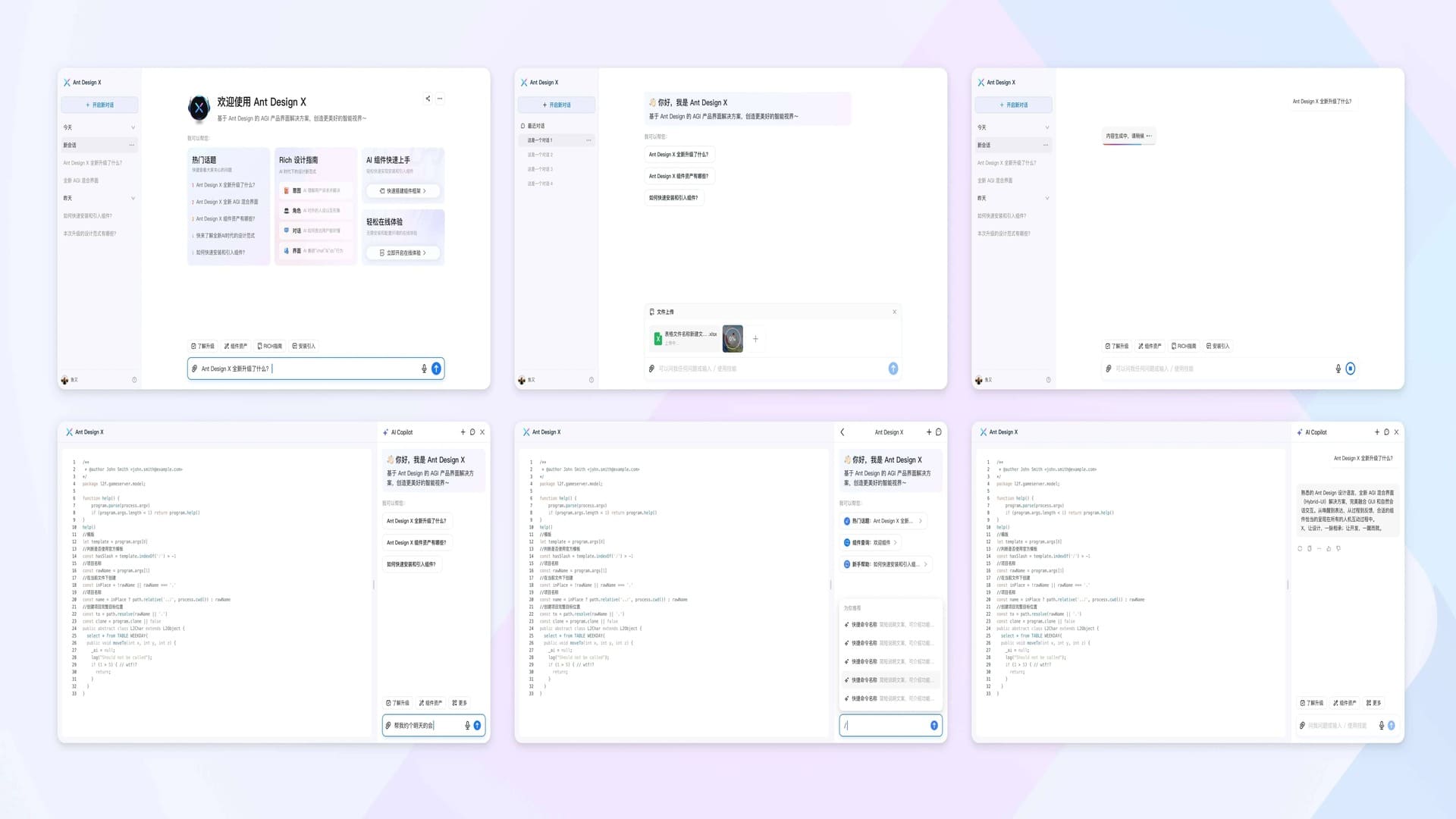

Ecosystem and Deployment: Open Neural Interface

Gemma 3 QAT’s open-source ecosystem provides seamless deployment:

- Framework Support: `Hugging Face Transformers`, `PyTorch`, `JAX`, `llama.cpp`, `MLX`, with recommended `stduhpf` `Q4_0` version

- Deployment Paths: Weights available on `Hugging Face`, `Ollama`, `Kaggle`, online testing through `Google AI Studio`

- Academic Support: `Gemma 3` academic program provides `Google Cloud` credits

Safety and Limitations

Gemma 3 QAT aligns with safety policies through data filtering, SFT, and RLHF, with violation rates below 0.1% in high-risk domains (e.g., CBRN). Limitations include:

- License Restrictions: Prohibited for training other models

- 1B Model: Text-only support, 32K context window, no multimodal capabilities

- Object Detection: Weak zero-shot object detection performance

Future Outlook: Neural Space Age of Edge AI

Gemma 3 QAT redefines the resource-performance boundary with QAT technology. The 1B model injects a “micro nuclear core” into edge devices, while the 27B model provides high-performance inference for cloud deployment. Looking ahead, neural compression and dynamic quantization will further reduce model sizes, driving AI adoption in IoT, 6G, and autonomous systems.
